I wasn’t trying to uncover cultural bias. I was just shopping for furniture.

All I did was make a simple shopping query: "Tall chest of drawers." Sure enough, Google's new AI Mode responded with mild generative content telling me bland stuff, such as what drawers are for and that they are made of "a variety of materials like wood, metal, and engineered wood, and are often found in bedrooms, living rooms, and hallways."

Whatevs. But then I wondered: what would happen if I asked AI Mode to tackle something less anodyne?

To test this, I searched "bicycle," and AI Mode provided a basic definition: "A human-powered or motor-assisted vehicle with two wheels." It then listed common types (road, mountain, hybrid) and related health benefits (cardiovascular fitness, muscle strength, mental well-being).

Below that definition, unprompted, was a section titled “Bicycle Safety” that was definitely not neutral:

To ensure a safe ride, consider these tips:

- Wear a Helmet: A properly fitted helmet is crucial for protecting your head in case of a crash.

- Be Visible: Wear bright clothing during the day and use reflective gear and lights at night or when visibility is poor.

- Follow Traffic Laws: Obey traffic signs, signals, and road markings.

- Ride with the Flow of Traffic: Ride on the right side of the road in the same direction as traffic.

- Use Bike Lanes When Available: Bike lanes provide a safer space for cyclists.

- Be Predictable: Ride in a straight line, avoid swerving, and signal your turns.

- Be Aware of Surroundings: Look out for hazards, pedestrians, and other vehicles.

- Maintain Your Bike: Ensure brakes are functioning correctly, tires are properly inflated, and all parts are in good working order.

In short, the AI's response cast cyclists as vulnerable, erratic objects in need of management and self-discipline.

But when I searched “car,” I got something different. Yes, there was first the neutral history lesson and taxonomy of vehicle types (though it included the eyebrow-raising assertion that SUVs are “suitable for both city and off-road driving”), but then the AI, again unprompted, discussed the deleterious environmental impact of cars, including this sentence:

“Mitigation efforts include using cleaner vehicles like hybrids or electric cars, driving efficiently, and implementing policies and technologies such as emission standards and advancements in electric vehicles.”

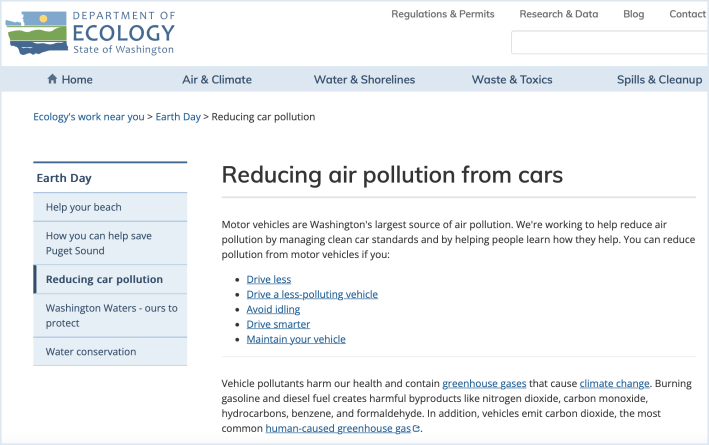

That last sentence was attributed to the Washington State Department of Ecology.

But that didn't seem complete, so I dug deeper into the state's website and found the full list of advice, much of which Google's AI had left out:

- Drive less

- Drive a less-polluting vehicle

- Avoid idling

- Drive smarter

- Maintain your vehicle

So Google's AI not only omitted the lead point ("drive less") but inverted the message: Washington State's call to reduce car use became, in AI Mode, a plug for electric vehicles and driving "efficiently."

Next, I took a closer look at AI Mode's bicycle safety information, which had been sourced from the National Highway Traffic Safety Administration (not exactly an impartial source in a car-centric federal transportation system). Again, the AI ignored something pretty damn crucial from the NHTSA page:

The page states: "1,166 bicyclists were killed in traffic crashes in 2023."

And NHTSA includes an entire page on defensive driving — not for cyclists, but for drivers:

“Be focused and alert to the road and all traffic around you; anticipate what others may do, before they do it. This is defensive driving — the quicker you notice a potential conflict, the quicker you can act to avoid a potential crash. … No texting, listening to music or using anything that distracts you by taking your eyes and ears or your mind off the road and traffic.”

None of that shows up in Google AI’s summary. No reminder that drivers are both the problem and the solution.

This isn’t just about style or semantics. It reflects how generative AI — trained on decades of car-centric advertising, government communications, and cultural defaults — reproduces the same systemic bias we see in street design:

Cars are the norm.

Bicycles are the exception.

Safety is the responsibility of the most-vulnerable road users, not the most-dangerous ones.

And that's the danger lurking in every AI search: the self-perpetuating message, baked into large language models, that bicycles are hazards and cars are normal, and that public warnings can be reframed as corporate gloss.

We need tools to build better cities. So clearly, we need better algorithms, too.